Samsung

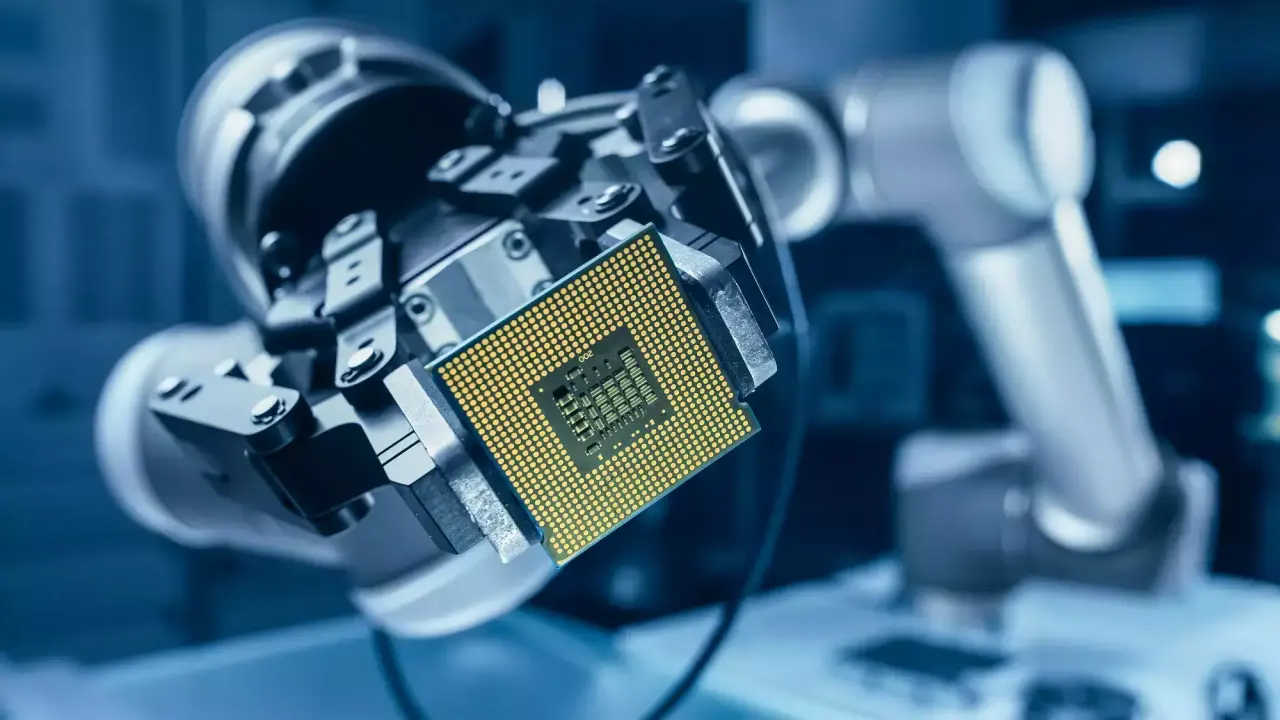

Samsung Inaugurates New HBM Team To Upgrade AI Chip Yield

The Korean electronics firm has reportedly formed a new HBM team within its memory division to improve production efficiency for its upcoming AI memory (HBM4) and its AI accelerator (Mach-1).

According to the Korea Economic Daily (KED), the new HBM team will handle the research, development, and sales of DRAM and NAND flash memory. Samsung formed its first HBM-focused team in January, which makes this the second team dedicated to HBM.

The new team will be led by Hwang Sang-joon, Executive Vice President and Head of DRAM Product and Technology at Samsung. If the KED report is accurate, the Korean giant is aiming to overtake SK Hynix, which currently leads the HBM market.

Back in 2019, Samsung disbanded its HBM team in the mistaken belief that the market would not grow significantly. According to an earlier TrendForce press release, the three major HBM manufacturers held the following market shares last year:

- SK Hynix with 46-49%

- Samsung with 46-49%

- Micron with 4-6%

In its push for leadership in the AI chip industry, Samsung is pursuing a “two-track” strategy, simultaneously developing two cutting-edge chips: HBM memory and the Mach-1 AI accelerator. Earlier this year, SK Hynix took the lead in getting its HBM3e memory validated by customers. Micron appears close behind and expects to have its HBM3e products ready by the end of the first quarter.

This timing aligns with Nvidia’s plan to roll out its H200 products, which use HBM3e memory, by the end of the first half. Although Samsung appears slightly behind in sample submissions, it is expected to complete its HBM3e validation by the end of the first quarter, with shipments beginning in the first half of this year. Samsung is also reportedly preparing to develop a next-generation accelerator, “Mach-2,” tailored for AI inference.


Samsung TV Plus Expands with US Sports, Music, Family & Entertainment

At the IAB NewFronts, Samsung Ads made a two-pronged pitch aimed at improving the experience for both consumers and advertisers.

For consumers, Samsung is adding new premium content across various genres to its free streaming service, Samsung TV Plus. For advertisers, Samsung Ads is using artificial intelligence to deliver improved ad targeting and results. The company highlighted its strong market position as America’s top choice for TVs and the undisputed global TV leader for 18 years, and said it continues to innovate and transform living rooms into central hubs.

Samsung is expanding its investment in the Samsung TV Plus service with all-new premium programming partnerships. The service, which grew more than 60% year-over-year, announced a suite of major league channels that will deliver sports content from Major League Baseball (MLB) and more. The details are below:

- MLB: Samsung TV Plus is collaborating with Major League Baseball (MLB) to debut a new MLB FAST (free ad-supported streaming television) channel. Although the channel will not show live games, it will give baseball fans weekly game replays, Minor League game replays, game recaps, and other exclusive content.

- PGA TOUR: This channel will offer comprehensive coverage of all things PGA TOUR, including behind-the-scenes programming, documentaries, tournament recaps, highlights, and competitions.

- AHL: Once this channel launches, Samsung TV Plus will become the first FAST platform to air live American Hockey League games, featuring the Ontario Reign, the Los Angeles Kings affiliate.

- Formula 1 Channel: The Formula 1 Channel is billed as the go-to destination for fans to catch up on all the action from F1, F2, F3, and F1 Academy races throughout the season, including analysis, replays, and documentaries.

- ONE Championship TV: The debut of ONE Championship TV will bring combat sports content to the service, featuring unique live events on TV Plus, highlights, series, and behind-the-scenes access from the world’s largest martial arts organization.

For music fans, Samsung TV Plus also announced an exclusive partnership with Warner Music, adding to the approximately 500 premium national and local channels already on the service. Warner Music content will appear in Samsung TV Plus’ recently launched dedicated music destination and will feature exclusive music playlists such as The Drop and Artist Odyssey.

Samsung Ads is also expanding Smart Outcomes, its suite of AI-powered performance solutions, with two new offerings, Smart Acquisition and CTV to Mobile, aimed at making advertising more effective.


Galaxy AI Powers Samsung’s Smartphone Sales Surge

Samsung Electronics has released its Q1 2024 financial results, and they are strong, with the mobile division buoyed by Galaxy S24 sales.

The Korean brand launched the Galaxy S24 flagship series in January, bringing a slew of new Galaxy AI features to the new phones as well as to older flagship devices. Samsung’s mobile and network division reported an operating profit of 3.51 trillion won (about $2.54 billion) in Q1 2024.

As earlier reports suggested, Galaxy AI, a package of generative AI features, was the key driver of the sales growth. The company says it plans to bring these AI features to more flagship phones in Q2 2024 and promises that “it will continue to invest in research and development to further expand and refine Galaxy AI.”

The company is also set to unveil a lineup of new Galaxy devices at its Galaxy Unpacked event in July 2024, including the Galaxy Z Fold 6, Galaxy Z Flip 6, the Galaxy Tab S10 series, the Galaxy Watch 7 line, and the Galaxy Ring. Samsung is not the only firm working on generative AI features; as AI becomes increasingly important in the smartphone industry, other manufacturers such as Google, Xiaomi, OnePlus, and OPPO are also pursuing generative AI capabilities.

The increased investment also follows a report that the Galaxy S25 series would run Google’s Gemini Nano 2 machine learning model. The current version of Gemini Nano powers on-device AI features such as Recorder summaries and Smart Reply in Gboard.


Samsung Unveils Campaign for Paris Olympics 2024

Samsung has launched a new campaign to celebrate the Olympic and Paralympic Games, which take place in Paris later this year. The campaign, named “Open Always Wins,” promotes Samsung’s brand value of openness through its association with the Olympic and Paralympic Games.

Samsung has been an Olympic partner since the Olympic Winter Games in Nagano in 1998, and its commitment to the Olympic movement, which will soon enter its fourth decade, extends through Los Angeles 2028. The company has also been involved with the Paralympic Games since 2006, supporting the Paralympic movement and encouraging athletes and fans worldwide to share the excitement and inspiration of the Games through Samsung’s transformative mobile technology.

The “Open Always Wins” theme conveys the brand’s belief that openness enables new perspectives and unlimited possibilities, an idea that will sit at the heart of its Paris 2024 programming.

The campaign shares the stories of Team Samsung Galaxy athletes skateboarder Aurelien Giraud (France), para sprinter Johannes Floors (Germany), and breakdancer Sarah Bee (France), showing how being open enables people to do extraordinary things.

Samsung has also unveiled a new showcase, the Olympic Rendezvous @ Samsung Champs-Elysees 125, which aims to bring Olympic and Paralympic Games fans a whole new experience on an epic journey to Paris 2024. Visitors can relive past Games moments with Galaxy, explore the latest innovations, and create lasting memories with Galaxy AI.

“The entire space is designed to celebrate Samsung’s commitment to openness,” said architect Jean Nouvel, who designed the space. “The abstract graphic façade captures the reflections of movements and lights that appear and disappear in an instant, while the matte, reflective white mirrors in the lobby invite the discovery of the latest innovations.”

The Olympic Rendezvous @ Samsung, located at 125 Avenue des Champs-Élysées, opens to the public on May 3 and runs until October 31. It is open Monday to Saturday from 10:00 to 20:00 and on Sunday from 11:00 to 20:00. A series of special events will be held throughout the summer, open to anyone who wants to immerse themselves in the Paris 2024 Olympic and Paralympic Games.